Trezor Firmware documentation

This documentation can also be found at docs.trezor.io where it is available in a HTML-built version compiled using mdBook.

Welcome to the Trezor Firmware repository. This repository is so called monorepo, it contains several different yet very related projects that together form the Trezor Firmware ecosystem.

Repository Structure

ci: Helper files, data, and scripts for the CI pipelinecommon/defs: JSON coin definitions and support tablescommon/protob: Common protobuf definitions for the Trezor protocolcommon/tools: Tools for managing coin definitions and related datacore: Trezor Core, firmware implementation for Trezor Tcrypto: Stand-alone cryptography library used by both Trezor Core and the Trezor One firmwaredocs: Assorted documentationlegacy: Trezor One firmware implementationpython: Python client library and thetrezorctlcommandstorage: NORCOW storage implementation used by both Trezor Core and the Trezor One firmwaretests: Firmware unit test suitetools: Miscellaneous build and helper scriptsvendor: Submodules for external dependencies

Contribute

See CONTRIBUTING.md.

Also please have a look at the docs, either in the docs folder or at docs.trezor.io before contributing. The misc chapter should be read in particular because it contains some useful assorted knowledge.

Security vulnerability disclosure

Please report suspected security vulnerabilities in private to [email protected], also see the disclosure section on the Trezor.io website. Please do NOT create publicly viewable issues for suspected security vulnerabilities.

Note on terminology

The key words "MUST", "MUST NOT", "REQUIRED", "SHALL", "SHALL NOT", "SHOULD", "SHOULD NOT", "RECOMMENDED", "MAY", and "OPTIONAL" in this document are to be interpreted as described in RFC 2119.

Trezor Core

Trezor Core is the second-gen firmware running on Trezor devices. It currently runs on Trezor T, Trezor Safe 3, Trezor Safe 5 and Trezor Safe 7.

Trezor Core is part of the trezor-firmware monorepo to be found on GitHub, in the core subdirectory.

Trezor Core uses MicroPython, it is a Python implementation for embedded systems, which allows us to have an application layer in Python, which makes the code significantly more readable and sustainable. This is what you find in the src folder.

Not everything is in Python though, we need to use C occasionally, usually for performance reasons. That is what embed/upymod is for. It extends MicroPython's modules with a number of our owns and serves as a bridge between C and Python codebase. Related to that, mocks contain Python mocks of those functions to improve readability and IDE functioning.

Where appropriate, we also use Rust. For example, all UI components and animations are implemented in embed/rust. Similarly to C bindings, you can find Python mocks for the Rust functions in mocks directory. Developing new features in Rust is preferred in the future.

Boot

Module src/main.py is the first one to be invoked in MicroPython. It starts the USB, initializes the wire codec and boots applications (see Apps).

Build

Building for Trezor Model One? See the legacy documentation.

New Project

Run the following to checkout the project:

git clone --recurse-submodules https://github.com/trezor/trezor-firmware.git

cd trezor-firmware

uv sync

cd core

After this you will need to install some software dependencies based on what flavor of Core you want to build. You can either build the Emulator or the actual firmware running on ARM devices. Emulator (also called unix port) is a unix version that can run on your computer. See Emulator for more information.

Existing Project

If you are building from an existing checkout, do not forget to refresh the submodules

and sync the uv environment:

git submodule update --init --recursive --force

uv sync

Uv

We use uv to install and track Python dependencies. You

need to install it, sync the packages and then use uv run for every command or

activate the uv environment before typing any commands. The commands in this section

suppose you are in a uv environment!

uv sync

source .venv/bin/activate

Build instructions for Embedded (ARM port)

First, clone the repository and initialize the submodules as defined here.

Then, you need to install all necessary requirements.

Requirements

The recommended way to control the requirements across all systems is to install nix-shell, which automatically installs all requirements in an isolated environment using the shell.nix configuration file located in the repository root.

To install nix-shell, follow the instructions here.

Once nix-shell is installed, go to the repository root and run:

nix-shell

Working with Developer Tools

If you need to work with embedded development tools such as OpenOCD, gcc-arm-embedded, gdb, etc., you can run nix-shell with the following argument to enable additional development tools:

nix-shell --arg devTools true

Manual Requirements Installation

If you prefer to install the requirements manually, look into the shell.nix file where you can find a list of requirements with versions.

Python Dependencies

All Python dependencies and packages are handled with uv. If you work in nix-shell, uv will be installed automatically. Then, you can install the dependencies and run the uv shell in the repository root.

uv sync

source .venv/bin/activate

Note: The recommended way of initializing your environment is to first run nix-shell and then initialize the uv shell within it.

Protobuf Compiler

The protocol buffer compiler protoc is needed to (unsurprisingly) compile protocol buffer files. Follow the installation instructions for your system.

Rust

Install the appropriate target with rustup:

rustup target add thumbv7em-none-eabihf # for TT

rustup target add thumbv7m-none-eabi # for T1

Building

make vendor build_boardloader build_bootloader build_firmware

Uploading

Use make upload to upload the firmware to a production device.

- For TT: Do not forget to enter bootloader on the device beforehand.

- For TS3: You will have to unlock bootloader first. Make sure to read the link in completeness for potentially unwanted effects.

Flashing

For flashing firmware to blank device (without bootloader) use make flash.

You need to have OpenOCD installed.

Building in debug mode

You can also build firmware in debug mode to see log output or run tests.

PYOPT=0 make build_firmware

To get a full debug build, use:

make build_firmware BITCOIN_ONLY=0 PYOPT=0

Use screen to enter the device's console. Do not forget to add your user to the dialout group or use sudo. Note that both the group and the tty name can differ, use ls -l /dev/tty* or ls /dev/tty* | grep usb to find out proper names on your machine.

screen /dev/ttyACM0

Build instructions for Emulator (Unix port)

Hint: Using emulator as described here is useful during firmware development. If you intend to use the emulator without modifying the firmware, you might be looking for Trezor User Env.

First clone, initialize submodules, install uv and enter the uv shell as

defined here. Do not forget you need to be in a uv shell

environment!

Dependencies

Install the required packages, depending on your operating system.

- Debian/Ubuntu:

sudo apt-get install scons libsdl2-dev libsdl2-image-dev llvm-dev libclang-dev clang

- Fedora:

sudo yum install scons SDL2-devel SDL2_image-devel clang-devel

- OpenSUSE:

sudo zypper install scons libSDL2-devel libSDL2_image-devel

- Arch:

sudo pacman -S scons sdl2 sdl2_image clang-devel

- NixOS:

There is a shell.nix file in the root of the project. Just run the following before entering the core directory:

nix-shell

- Mac OS X:

Consider using Nix. With Nix all you need to do is nix-shell.

For other users:

brew install scons sdl2 sdl2_image pkg-config llvm

- Windows: not supported yet, sorry.

Protobuf Compiler

The protocol buffer compiler protoc is needed to (unsurprisingly) compile protocol buffer files. Follow the installation instructions for your system.

Rust

You will require Rust and Cargo. The currently supported version is 1.88 nightly. The

recommended way to install both is with rustup. Make sure you

are up to date:

rustup default nightly

rustup update

The bindgen crate requires libclang for generating MicroPython FFI.

Build

Run the build with:

make build_unix # default

make build_unix TREZOR_MODEL=T2B1 # different model

Run

Now you can start the emulator:

./emu.py

The emulator has a number of interesting features all documented in the Emulator section.

Building for debugging and hacking in Emulator (Unix port)

Build the debuggable unix binary so you can attach the gdb or lldb. This removes optimizations and reduces address space randomization. Beware that this will significantly bloat the final binary and the firmware runtime memory limit HEAPSIZE may have to be increased.

make build_unix_debug

Emulator

:bulb: Hint: Using emulator as described here is useful during firmware development. If you intend to use the emulator without modifying the firmware, you might be looking for Trezor User Env.

Emulator is a unix version of Core firmware that runs on your computer.

There is neither boardloader nor bootloader and no firmware uploads. Emulator runs the current code as is it is and if you want to run some specific firmware version you need to use git for that (simply checkout the right branch/tag). Actually, maybe we should call it simulator to be precise, because it does not emulate the device in its completeness, it just runs the firmware on your host.

Emulator significantly speeds up development and has several features to help you along the way.

⚠️ Disclaimer ⚠️

This emulator is for development purposes only. It uses a pseudo random number generator, and thus no guarantee on its entropy is made. No security or hardening efforts are made here. It is, and will continue to be, intended for development purposes only. Security and hardening efforts are only made available on physical Trezor hardware.

Any other usage of the emulator is discouraged. Doing so runs the risk of losing funds.

How to run

- build the emulator

- run

emu.pyinside theuvenvironment:- either activate the

uvenvironment first, and then use./emu.py - or always use

uv run ./emu.py

- either activate the

- start the bridge:

- to initialise the bridge with emulator support, start it with

trezord-go -e 21324 - alternatively, launch the desktop suite from the command line with the argument

--bridge-dev

- to initialise the bridge with emulator support, start it with

Now you can use the emulator the same way as you use the device, for example you can use Trezor Suite, use our Python CLI tool (trezorctl), etc. Simply click to emulate screen touches.

Features

Run ./emu.py --help to see all supported command line options and shortcuts. The

sections below only list long option names and most notable features.

Debug and production mode

By default the emulator runs in debug mode. Debuglink is available (on port 21325 by default), exceptions and log output goes to console. To indicate debug mode, there is a red square in the upper right corner of Trezor screen.

To enable production mode, run ./emu.py --production, or set environment variable PYOPT=1.

Initialize with mnemonic words

In debug mode, the emulator can be pre-configured with a mnemonic phrase.

To use a specific mnemonic phrase:

./emu.py --mnemonic "such deposit very security much theme..."

When using Shamir shares, repeat the --mnemonic option:

./emu.py --mnemonic "your first share" --mnemonic "your second share" ...

To use the "all all all" seed defined in SLIP-14:

./emu.py --slip0014

./emu.py -s

Storage and Profiles

Internal Trezor's storage is emulated and stored in the /var/tmp/trezor.flash file by

default. Deleting this file is similar to calling wipe device. You can also find

/var/tmp/trezor.sdcard for SD card. Starting the emulator with -e / --erase will

delete the files beforehand.

You can specify a different location for the storage and log files via the -p /

--profile option:

./emu.py -p foobar

This will create a profile directory in your home ~/.trezoremu/foobar containing

emulator run files. Alternatively you can set a full path like so:

./emu.py -p /var/tmp/foobar

You can also set a full profile path to TREZOR_PROFILE_DIR environment variable.

Specifying -t / --temporary-profile will start the emulator in a clean temporary

profile that will be erased when the emulator stops. This is useful, e.g., for tests.

Logging

By default, emulator output goes to stdout. When silenced with --quiet, it is

redirected to ${TREZOR_PROFILE_DIR}/trezor.log. You can specify an alternate output

file with --output.

Running subcommands with the emulator

In scripts, it is often necessary to start the emulator, run a command while it is available, and then stop it. The following command runs the device test suite using the emulator:

./emu.py --command pytest ../tests/device_tests

Profiling support

Run ./emu.py --profiling, or set environment variable TREZOR_PROFILING=1, to run the

emulator with a profiling wrapper that generates statistics of executed lines.

Memory statistics

Run ./emu.py --log-memory, or set environment variable TREZOR_LOG_MEMORY=1, to dump

memory usage information after each workflow task is finished.

Run in gdb

Running ./emu.py --debugger runs emulator inside gdb/lldb.

Watch for file changes

Running ./emu.py --watch watches for file changes and reloads the emulator if any

occur. Note that this does not do rebuild, i.e. this works for MicroPython code (which

is interpreted) but if you make C changes, you need to rebuild yourself.

Print screen

Press p on your keyboard to capture emulator's screen. You will find a png screenshot

in the src directory.

Disable animation

Run ./emu.py --disable-animation, or set environment variable

TREZOR_DISABLE_ANIMATION=1 to disable all animations.

Profiling emulator with Valgrind

Sometimes, it can be helpful to know which parts of your code take most of the CPU time. Callgrind tool from the Valgrind instrumentation framework can generate profiling data for a run of Trezor emulator. These can then be visualized with KCachegrind.

Bear in mind that profiling the emulator is of very limited usefulness due to:

- different CPU architecture,

- different/mocked drivers,

- & other differences from actual hardware. Still, it might be a way to get some insight without a hardware debugger and a development board.

Valgrind also currently doesn't understand MicroPython call stack so it won't help you when your code is spending a lot of time in pure python functions that don't call out to C. It might be possible to instrument trezor-core so that Valgrind is aware of MicroPython stack frames.

Build

make build_unix_frozen TREZOR_EMULATOR_DEBUGGABLE=1 ADDRESS_SANITIZER=0

With PYOPT=0, most of the execution time is spent formatting and writing logs, so it is recommended to use

PYOPT=1 (and lose DebugLink) or get rid of logging manually.

Run

If you're using Nix, you can use Valgrind and KCachegrind packages from our shell.nix:

nix-shell --arg devTools true

Record profiling data on some device tests:

./emu.py -a --debugger --valgrind -c 'sleep 10; pytest ../../tests/device_tests/ -v --other-pytest-args...'

Open profiling data in KCachegrind (file suffix is different for each emulator process):

kcachegrind src/callgrind.out.$PID

Trezor Core event loop

The event loop is implemented in src/trezor/loop.py and forms the core of the

processing. At boot time, default tasks are started and inserted into an event queue.

Such task will usually run in an endless loop: wait for event, process event, loop back.

Application code is written with async/await constructs. Low level of the event queue

processes running coroutines via coroutine.send() and coroutine.throw() calls.

MicroPython details

MicroPython does not distinguish between coroutines, awaitables, and generators. Some

low-level constructs are using yield and yield from constructions.

async def definition marks the function as a generator, even if it does not contain

await or yield expressions. It is thus possible to see async def __iter__, which

indicates that the function is a generator.

For type-checking purposes, objects usually define an __await__ method that delegates

to __iter__. The __await__ method is never executed, however.

Low-level API

Function summary

loop.run() starts the event loop. The call only returns when there are no further

waiting tasks -- so, in usual conditions, never.

loop.schedule(task, value, deadline, finalizer, reschedule) schedules an awaitable to

be run either as soon as possible, or at a specified time (given as a deadline in

microseconds since system bootup.)

In addition, when the task finishes processing or is closed externally, the finalizer

callback will be executed, with the task and the return value (or the raised exception)

as a parameter.

If reschedule is true, the task is first cleared from the scheduled queue -- in

effect, it is rescheduled to run at a different time.

loop.close(task) removes a previously scheduled task from the list of waiting tasks

and calls its finalizer.

loop.pause(task, interface) sets the task as waiting for a particular interface:

either reading from or writing to one of the USB interfaces, or waiting for a touch

event.

Implementation details

Trezor Core runs coroutine-based cooperative multitasking, i.e., there is no preemption.

Every task is a coroutine, which means that it runs uninterrupted until it yields a

value (or, in async terms, until it awaits something). In every processing step, the

currently selected coroutine is resumed by sending a value to it (which is returned as a

result of the yield/await, or raised as an exception if it is an instance of

BaseException). The tasks then runs uninterrupted again, until it yields or exits.

A loop in loop.run() spins for as long as any tasks are waiting. Two lists of waiting

tasks exist:

-

_queueis a priority queue where the ordering is defined by real-time deadlines. In most cases, tasks are scheduled for "now", which makes them run one after another in FIFO order. It is also possible to schedule a task to run in the future. -

_pausedis a collection of tasks grouped by the interface for which they are waiting.

In each run of the loop, io.poll is called to query I/O events. If an event arrives on

an interface, all tasks waiting on that interface are resumed one after another. No

scheduled tasks in _queue can execute until the waiting tasks yield again.

At most one I/O event is processsed in this phase.

When the I/O phase is done, a task with the highest priority is popped from _queue and

resumed.

I/O wait

When no tasks are paused on a given interface, events on that interface remain in queue.

When multiple tasks are paused on the same interface, all of them receive every event. However, a waiting task receives at most one event. To receive more, it must pause itself again. Event processing is usually done in an endless loop with a pause call.

If two tasks are attempting to read from the same interface, and one of them re-pauses

itself immediately while the other doesn't (possibly due to use of loop.race, which

introduces scheduling gaps), the other task might lose some events.

For this reason, you should avoid waiting on the same interface from multiple tasks.

Syscalls

Syscalls bridge the gap between await-based application code and the coroutine-based

low-level implementation.

Every sequence of awaits will at some point boil down to yielding a Syscall

instance. (Yielding anything else is an error.) When that happens, control returns to

the event loop.

The handle(task) method is called on the result. This way the syscall gets hold of the

task object, and can schedule() or pause() it as appropriate.

As an example, consider pausing on an input event. A running task has no way to call

pause() on itself. It would need to pass a separate function as a callback.

The wait syscall can be implemented as a simple wrapper around the pause() low-level

call:

class wait(Syscall):

def __init__(self, msg_iface: int) -> None:

self.msg_iface = msg_iface

def handle(self, task: Task) -> None:

pause(task, self.msg_iface)

The __init__() method takes all the arguments of the "call", and handle() pauses the

task on the given interface.

Calling code will look like this:

event = await loop.wait(io.TOUCH)

The loop.wait(io.TOUCH) expression instantiates a new Syscall object. The argument

is passed to the constructor, and stored on the instance. The rest boils down to

event = await some_syscall_instance

which is equivalent to

event = yield from some_syscall_instance.__iter__()

The Syscall.__iter__() method yields self, returning control to the event loop. The

event loop invokes some_syscall_instance.handle(task_object). The task_object is

then set to resume when a touch event arrives.

A side-effect of this design is that it is possible to store and reuse syscall instances. That can be advantageous for avoiding unnecessary allocations.

while True:

# every run of the loop allocates a new object

event = await loop.wait(io.TOUCH)

process_event(event)

touch_source = loop.wait(io.TOUCH)

while True:

# same instance is reused

event = await touch_source

process_event(event)

High-level API

Application code should not be using any of the above low-level functions. Awaiting syscalls is the preferred method of writing code.

The following syscalls and constructs are available:

loop.sleep(delay_ms: int): Suspend execution until the given delay (in

milliseconds) elapses. Return value is the planned deadline in milliseconds since system

start.

Calling await loop.sleep(0) yields execution to other tasks, and schedules the current

task for the next tick.

loop.wait(interface): Wait indefinitely for an event on the given interface.

Return value is the event.

Upcoming code modification adds a timeout parameter to loop.wait.

loop.race(*children): Schedule each argument to run, and suspend execution until

the first of them finishes.

It is possible to specify wait timeout for loop.wait by using loop.race:

result = await loop.race(loop.wait(io.TOUCH), loop.sleep(1000))

This introduces scheduling gaps: every child is treated as a task and scheduled

to run. This means that if the child is a syscall, as in the above example, its action

is not done immediately. Instead, the wait begins on the next tick (or whenever the

newly created coroutine runs) and the sleep in the tick afterwards. When nesting

multiple races, the child races also run later.

Also, when a child task is done, another scheduling gap happens, and the parent task is scheduled to run on the next tick.

Upcoming changes may solve this in relevant cases, by inlining syscall operations.

loop.mailbox() is an unidirectional communication channel, simplification of Go

channels.

It allows to put a value in the channel using put(value) on the mailbox instance.

To retrieve the value, use await on the same mailbox instance.

loop.spawn(task): Start the task asynchronously. Return an object that allows

the caller to await its result, or shut the task down.

Example usage:

task = loop.spawn(some_background_task())

await do_something_here()

result = await task

Unlike other syscalls, loop.spawn starts the task at instantiation time. awaiting

the same loop.spawn instance a second time will immediately return the result of the

original run.

If the task is cancelled (usually by calling task.close()), the awaiter receives a

loop.TaskClosed exception.

It is also possible to register a synchronous finalizer callback via

task.set_finalizer. This is used internally to implement workflow management.

Apps

The folder src/apps/ is the place where all the user-facing features are implemented.

Each app must be registered by the register function inside the file workflow_handlers.py. This functions assigns what function should be called if some specific message was received. In other words, it is a link between the MicroPython functions and the Protobuf messages.

Example

For a user facing application you would assign the message to the module in _find_message_handler_module. This binds the message GetAddress to function get_address inside the apps.bitcoin.get_address module.

# in core/src/apps/workflow_handlers.py

# ...

def _find_message_handler_module(msg_type: int) -> str:

from trezor.enums import MessageType

# ...

if msg_type == MessageType.GetAddress:

return "apps.bitcoin.get_address"

# ...

# in core/src/apps/bitcoin/get_address.py

# ...

async def get_address(msg: GetAddress, keychain: Keychain, coin: CoinInfo) -> Address:

# ...

Testing

We have two types of tests in Core:

- Unit tests that are specific to Trezor Core.

- Common tests, which are common to both Trezor Core (Model T, Safe 3) and Legacy (Model one). Device tests belong to this category.

Core unit tests

Unit tests are placed in the core/tests/ directory.

To start them, build unix port and run the following command from core/:

make test # run all unit test

make test TESTOPTS=test_apps.bitcoin.address.py # run a specific test

Common tests

See the tests section.

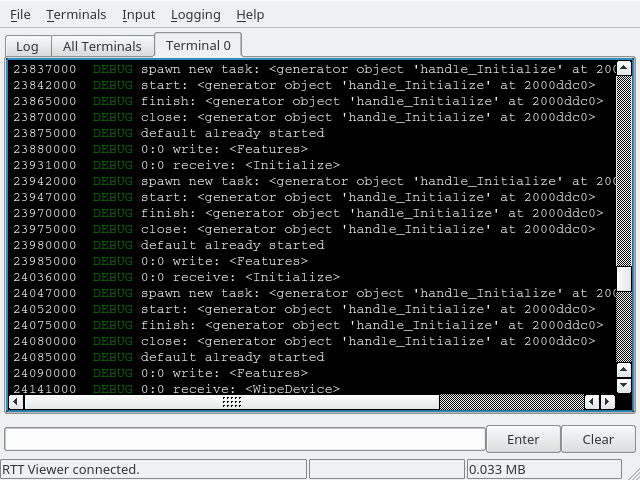

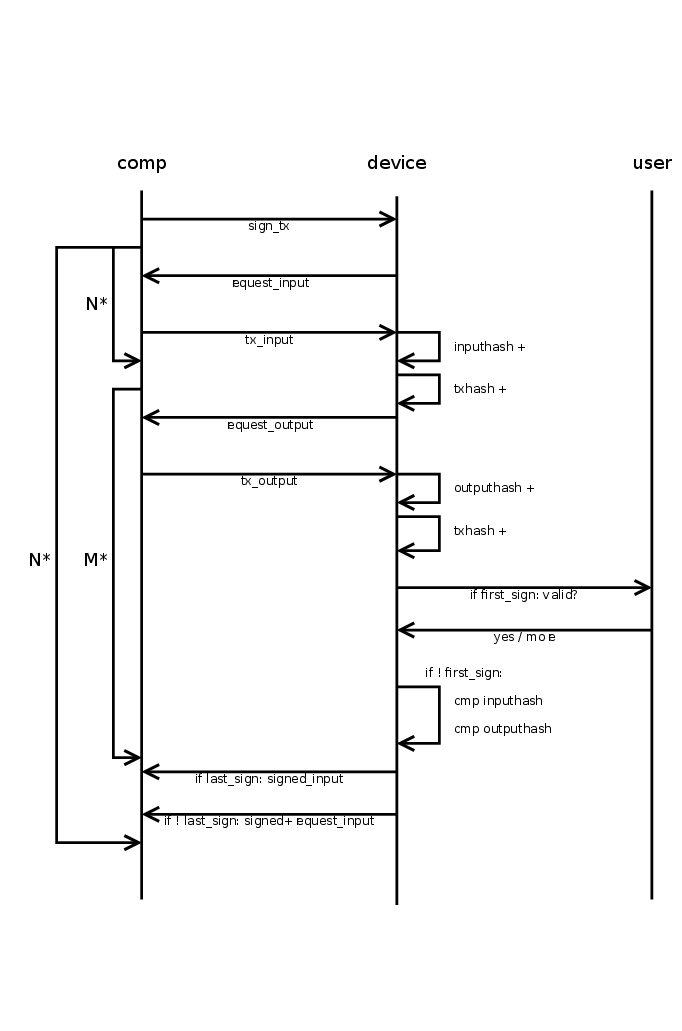

Embedded debug of firmware (C and Rust)

Notes on how to get both C and Rust debugging working "nicely".

Building properly

The #1 hassle in embedded debug is proper build because it is very easy to run out of flash space. Size optimizations on the other hand go against comfort or usability of debug.

Therefore it's usually hard to make a single profile or setting, but best way is to start with is probably these build options:

make PYOPT=0 BITCOIN_ONLY=1 V=1 VERBOSE=1 OPTIMIZE=-Og build_firmware

Options mean:

PYOPT=0- enable debuglink and testV=1 VERBOSE=1- just more of a check to see it's building with options you wantBITCOIN_ONLY=1- most of the time for C/Rust parts you don't need other coins and it saves space on flash to be usable for other than-OsoptimizationOPTIMIZE=-Og- optimization of C better suited for debug, but it will be larger than default-Os

Micropython has its own optimization setting, so if you need to step through its code as well, set it separately in its build.

Another way to save space in case build overflows flash is changing -fstack-protector-all to

-fstack-protector-strong or -fstack-protector-explicit temporarily for debugging in

SConscript.firmware.

Debug info is enabled for C and Rust in the flags and profiles (stripped when generating the .bin final image).

Putting it into debugger

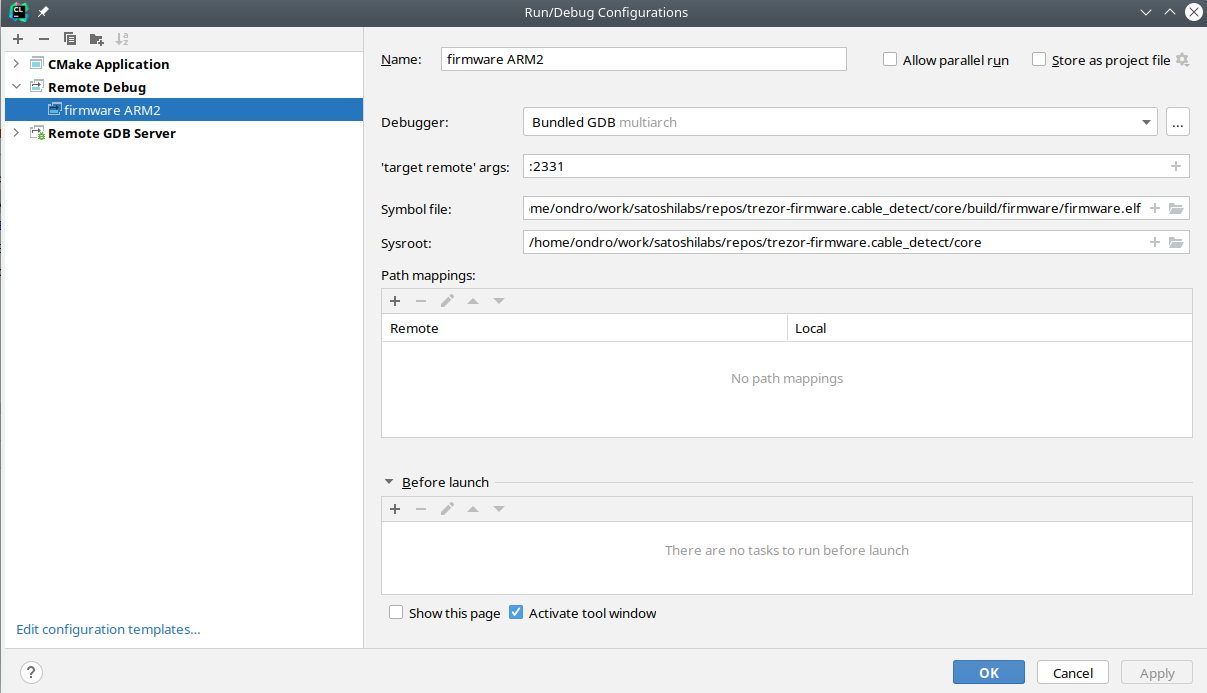

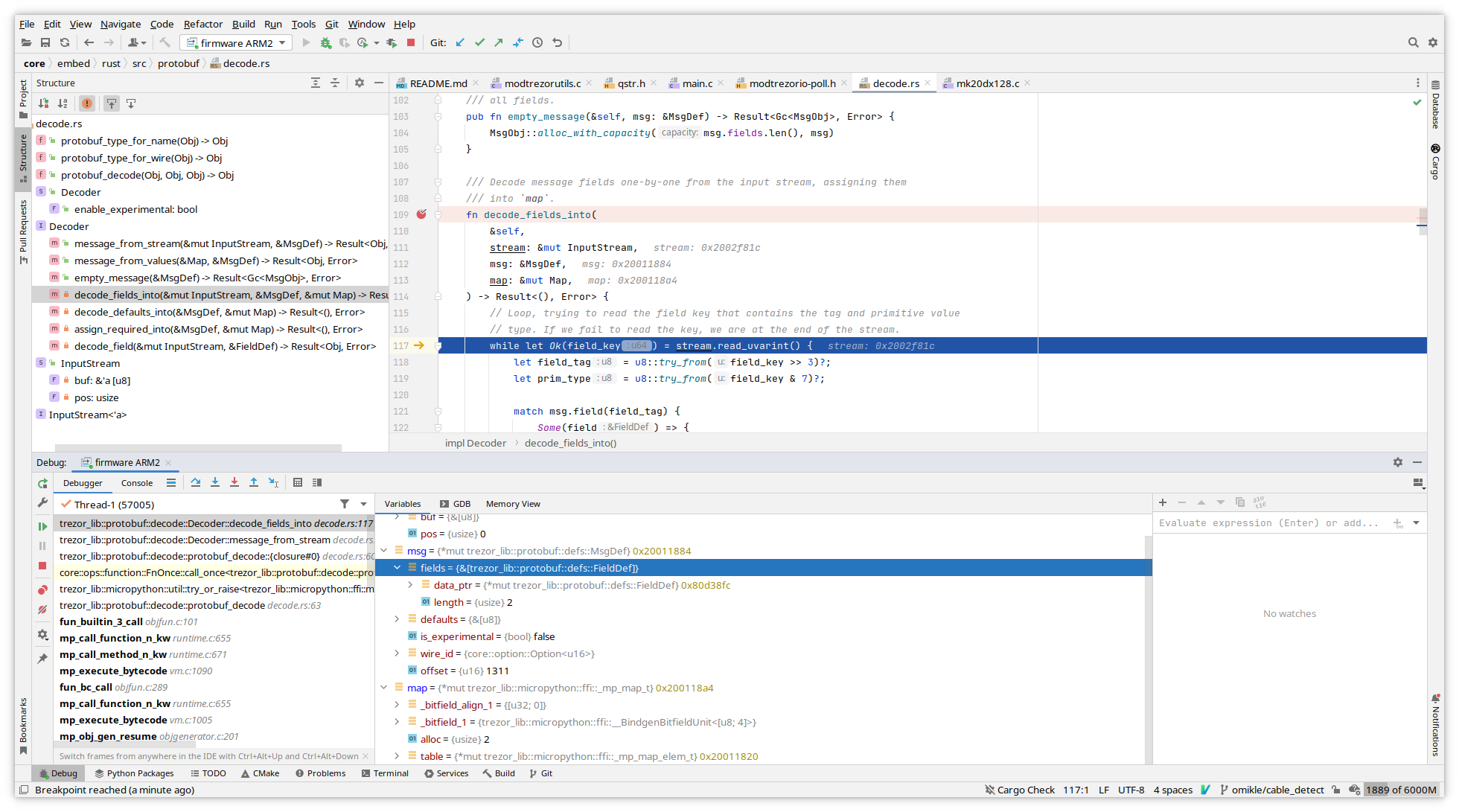

Once you have built and flashed the FW, configure debugger for remote debug.

General background into remote debug and instructions

for basic arm-none-eabi-gdb and VSCode are listed here.

Below are instructions for CLion with Rust plugin.

So far CLion seems the most complete implementation for ARM embedded debug, but these evolve quickly now.

Though all debuggers will have some historic limitations (especially some watch expressions and return values).

Start OpenOCD/JLink GDB server in a terminal

Depending on your SWD adapter, either (change speed up to 50000 depending on adapter)

JLinkGDBServerCLExe -select USB -device STM32F427VI -endian little -if SWD -speed 4000 -LocalhostOnly

or with openocd (best to use latest from git)

openocd -f interface/stlink.cfg -f target/stm32f4x.cfg

Set up a debug configuration as remote debug

Default port for "target remote" JLink GDB server is :2331, for openocd :3333

It should be also possible to use "Remote GDB Server" setting and let CLion execute openocd or JLink GDB server.

Now you can see variables from both Rust and C, set breakpoints

For pointers you can use memory view from variable's context menu.

Known limitations

Rust support is still in progress, so expect bugs sometimes.

Only way so far to get return value of function is to switch to GDB console and

use finish GDB command - unless you assign it to variable. GDB may not always show

it due to optimizations.

Not all trait info is output into debug info, so you will have issue with watching some expressions like this issue or this one.

Try not to put breakpoints on macro calls, since they may internally expand to too many addresses depending on inlining. This manifests when GDB will complain suddenly you have too many HW breakpoints or when JLink starts using flash breakpoints instead of just HW breakpoints.

Other ideas not thoroughly tested

You can define custom optimization level by choosing the -fxx options for C compiler and

similar ones for Rust with llvm-args that target LLVM passes.

Note that these change with compiler versions, LLVM 13 has

new pass manager.

The point would be to make a optimization level producing somewhat slower code, less inlining, but better debug experience.

Rust does not have equivalent of -Og level, this would be only way to make something similar.

The idea is generally to take an existing optimization level and change/remove some options that affect code size or optimize variables away, force them to stay in memory instead of registers. To look at what is used in passes you can print them out with:

llvm-as < /dev/null | opt -Oz -disable-output -debug-pass=Arguments

The -O0 level often generates too big code to fit in flash which is why this experiment

in customizing optimization level exists.

Additional notes on making CLion understand and parse code correctly

Note: Creating a project in CLion doesn't seem necessary for running debug like described above.

CLion remote debugger bindings will gather most information from debug info after connecting to external debugger (JLink or openocd GDB server), but it may be handy for general edit/completion/following definitions and so on.

Since we don't keep a CMakeLists.txt for core because everyone is using different

editor/IDE, here is a trick for creating it so that CLion will parse code without having

to run the debugger with debug info.

First, clone the repo and build both emulator and embedded code:

make build_unix

make build_embed

Now rename Makefile under core to something else, like Makefile.orig. Open the

core directory as new project in CLion.

Open any .c file, e.g. embed/projects/firmware/main.c.

At this point since CLion does not see Makefile or CMakeLists.txt, it will

suggest creating CMakeLists for you based on existing files.

Let it autogenerate one, then add following defines that are taken from build (there are more that should be added, but this suffices for most code including micropython stm32lib):

add_definitions(

-DFF_FS_READONLY=0

-DFF_FS_MINIMIZE=0

-DFF_USE_STRFUNC=0

-DFF_USE_FIND=0

-DFF_USE_FASTSEEK=0

-DFF_USE_EXPAND=0

-DFF_USE_CHMOD=0

-DFF_USE_LABEL=0

-DFF_USE_FORWARD=0

-DFF_USE_REPAIR=0

-DFF_CODE_PAGE=437

-DFF_USE_LFN=1

-DFF_LFN_UNICODE=2

-DFF_STRF_ENCODE=3

-DFF_FS_RPATH=0

-DFF_VOLUMES=1

-DFF_STR_VOLUME_ID=0

-DFF_MULTI_PARTITION=0

-DFF_USE_TRIM=0

-DFF_FS_NOFSINFO=0

-DFF_FS_TINY=0

-DFF_FS_EXFAT=0

-DFF_FS_NORTC=1

-DFF_FS_LOCK=0

-DFF_FS_REENTRANT=0

-DFF_USE_MKFS=1

-DSTM32_HAL_H=<stm32f4xx.h>

-DTREZOR_MODEL=T2T1

-DTREZOR_MODEL_T2T1=1

-DSTM32F427xx

-DUSE_HAL_DRIVER

-DSTM32_HAL_H="<stm32f4xx.h>"

-DAES_128 -DAES_192

-DRAND_PLATFORM_INDEPENDENT

-DUSE_KECCAK=1

-DUSE_ETHEREUM=1

-DUSE_MONERO=1

-DUSE_CARDANO=1

-DUSE_NEM=1

-DUSE_EOS=1

-DSECP256K1_BUILD

-DUSE_ASM_ARM

-DUSE_NUM_NONE

-DUSE_FIELD_INV_BUILTIN

-DUSE_SCALAR_INV_BUILTIN

-DUSE_EXTERNAL_ASM

-DUSE_FIELD_10X26

-DUSE_SCALAR_8X32

-DUSE_ECMULT_STATIC_PRECOMPUTATION

-DUSE_EXTERNAL_DEFAULT_CALLBACKS

-DECMULT_WINDOW_SIZE=8

-DENABLE_MODULE_GENERATOR

-DENABLE_MODULE_RANGEPROOF

-DENABLE_MODULE_RECOVERY

-DENABLE_MODULE_ECDH

-DTREZOR_FONT_BOLD_ENABLE

-DTREZOR_FONT_NORMAL_ENABLE

-DTREZOR_FONT_MONO_ENABLE

-DTREZOR_FONT_MONO_BOLD_ENABLE

)

include_directories(vendor/micropython)

include_directories(build/firmware/genhdr/)

include_directories(vendor/micropython/lib/stm32lib/STM32L4xx_HAL_Driver/Inc)

Rename the Makefile.orig back to Makefile. This is clumsy, but AFAIK there is no

explicit option to autogenerate CMakeLists.txt otherwise.

To make Rust code part of the project, right click embed/rust/Cargo.toml and

choose "Attach Cargo Project"

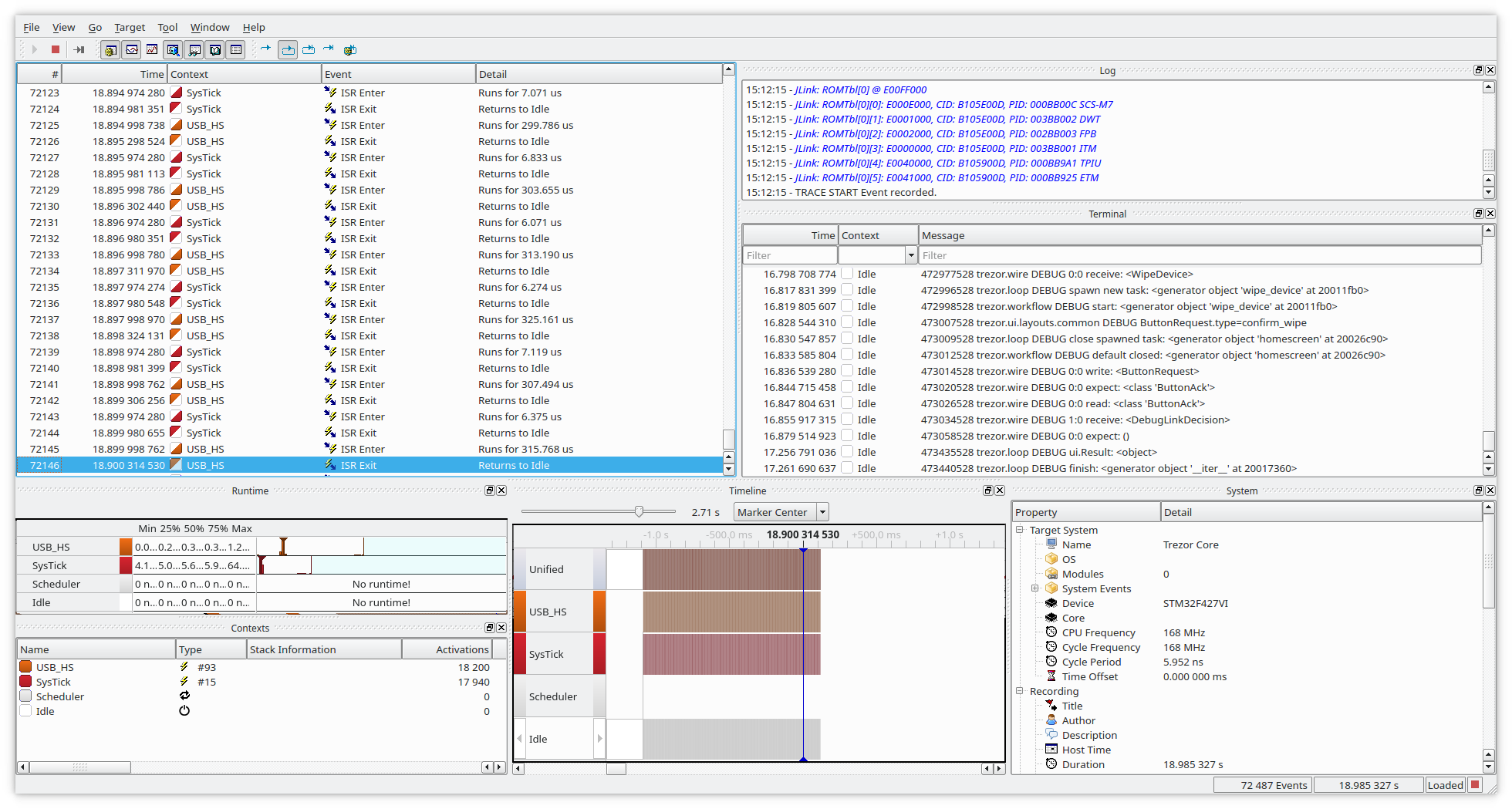

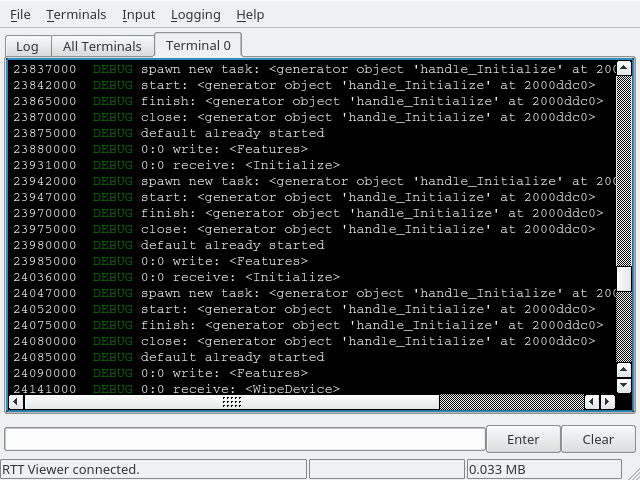

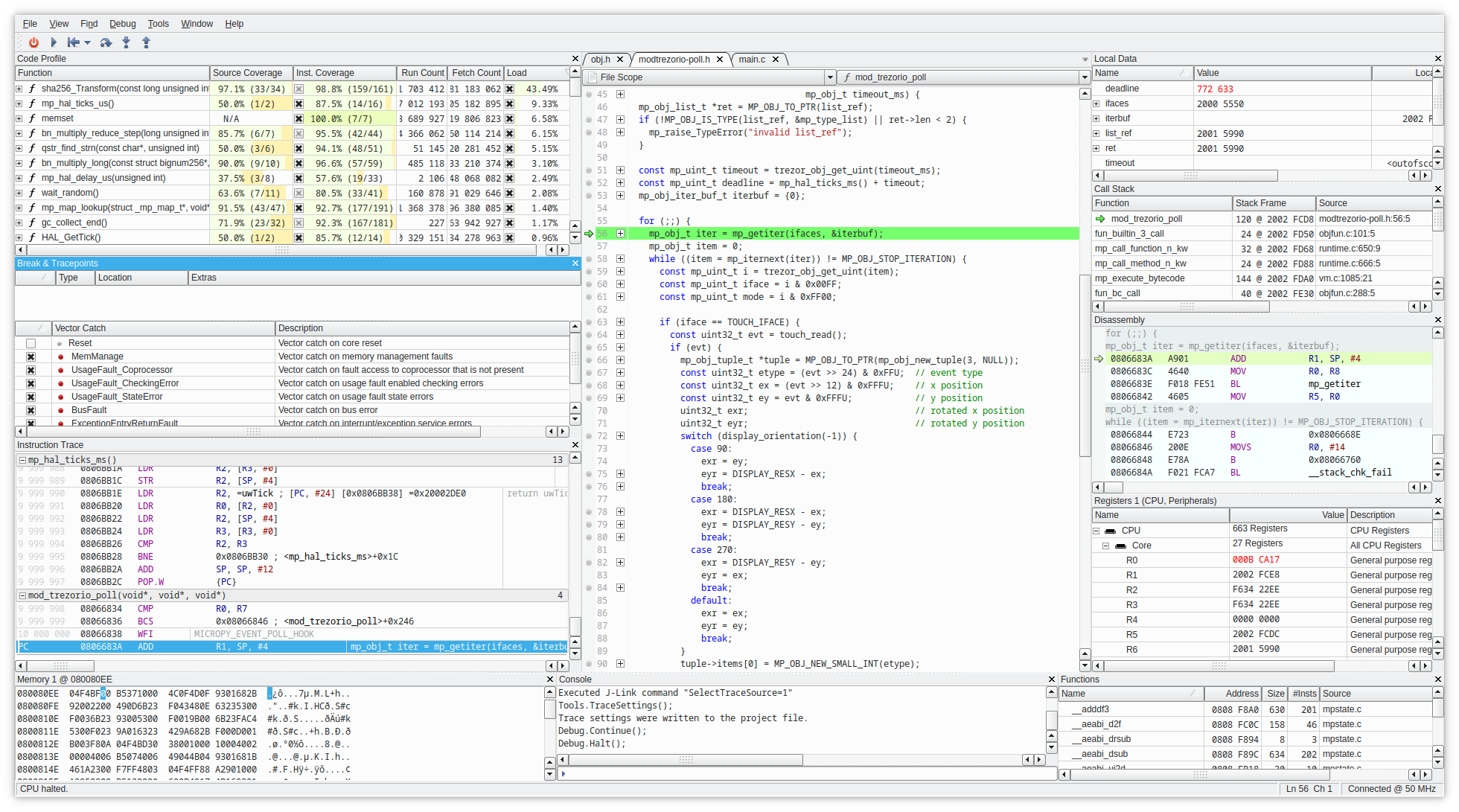

Debugging events with SystemView and Real-time Terminal (Trezor T only)

Systemview is an utility to debug interrupts or other events, counters, logs which does not require any extra pin (except the SWD pins attached to CPU).

An example showing two interrupts, and debug log passed via memory instead of UART.

Second example is RTT with color logging (kind of subset what SystemView can do, sans the colors).

Compared to UART the speed of counters or messages is enormously faster as SystemView does trick where it stores all in a small location in RAM (cyclic buffer)

This requires JLink/JTrace adapter and SystemView installed. SystemView is available as free/educational or commercial licensed.

Building with SystemView enabled

Clean build in core:

make clean

Enable SYSTEM_VIEW

Change in SConscript.firmware: PYOPT to 0 (not strictly necessary, but you won't see

debug messages otherwise, though this enables to use it also on non-debug build).

Then it's suggested to change OPTIMIZE to -Og instead of -Os which will still

does optimizations, but only a subset that does hinder debug by reordering

instructions.

PYOPT = ARGUMENTS.get('PYOPT', '0')

COPT=os.getenv('OPTIMIZE', '-Og')

Then enable the SYSTEM_VIEW feature in FEATURE_FLAGS:

FEATURE_FLAGS = {

"RDI": True,

"SECP256K1_ZKP": False,

"SYSTEM_VIEW": True,

}

Then in core/src/trezor/log.py change color to False, SystemView does not support

colorful messages (lines will be garbled), but if you want colors you can also use

Real-time terminal (RTT, see below)

set color = False

Then build with (change PYOPT or BITCOIN_ONLY as needed):

V=1 VERBOSE=1 PYOPT=0 BITCOIN_ONLY=1 SYSTEM_VIEW=1 make build_embed

After flashing with:

make flash_firmware_jlink

You should be able to conect with SystemView or RTT and collect the data and analyze them.

Sending data to RTT instead of SystemView

There are two mutually exclusive macros, first one is turned on by default and sends data to SystemView. Changing data sending to RTT is just undefining first and defining second (in theory it could be able to send to both destinations, but never tried it.)

SYSTEMVIEW_DEST_SYSTEMVIEWSYSTEMVIEW_DEST_RTT

Now when you run JLinkRTTViewerExe you should see data in the terminal:

It is possible to extend this mechanism to include multiple streams/terminals. Terminals work like a usual terminal, so you can use it in debugging also for user input.

Combining SystemView/RTT with other debug tools

In general you can use SystemView along with GDB/CLion/Ozone or other debugger at the same time, it's just advised that you keep all connections at the same frequency, otherwise it may lead to unexpected behavior, weird resets, etc.

So e.g. you can profile the interrupts, DMA in SystemView while also profiling it on instruction-level scale in Ozone:

Miscellaneous

Topics that do not fit elsewhere:

- Code style

- SLIP-39 in Core

- Exceptions usage

- Memory fragmentation management

- Running Trezor firmware on STM32F429I-DISC1

- Translation data format

- Optiga configuration

Trezor Core Boot Stages

Trezor T initialization is split into two stages. See Memory Layout for info about in which sectors each stage is stored.

First stage (boardloader) is stored in write-protected area, which means it is non-upgradable. Only second stage (bootloader) update is allowed.

First Stage - Boardloader

First stage checks the integrity and signatures of the second stage and runs it if everything is OK.

If first stage boardloader finds a valid second stage bootloader image on the SD card (in raw format, no filesystem), it will replace the internal second stage, allowing a second stage update via SD card.

The boardloader is special in that it is the device's write protected embedded code. The primary purpose for write protecting the boardloader is to make it the immutable portion that can defend against code-based attacks (e.g.- BadUSB) and bugs that would reprogram any/all of the embedded code. It assures that only verified signed embedded code is run on the device (and that the intended code is run, and not skipped). The write protection also provides some defense against attacks where the attacker has physical control of the device.

The boardloader must include an update mechanism for later stage code because if it did not, then a corruption/erasure of later stage flash memory would leave the device unusable (only the boardloader could run and it would not pass execution to a later stage that fails signature validation).

Developer note:

A microSD card can be prepared with the following. Note that the bootloader is allocated 128 KiB.

WARNING: Ensure that you want to overwrite and destroy the contents of /dev/mmcblk0 before running these commands.

Likewise, /dev/mmcblk0 may be replaced by your own specific destination.

-

sudo dd if=/dev/zero of=/dev/mmcblk0 bs=512 count=256 conv=fsync -

sudo dd if=build/bootloader/bootloader.bin of=/dev/mmcblk0 bs=512 conv=fsync

Second Stage - Bootloader

Second stage checks the integrity and signatures of the firmware and runs it if everything is OK.

If second stage bootloader detects a pressed finger on the display or there is no firmware loaded in the device, it will start in a firmware update mode, allowing a firmware update via USB.

Common notes

- Hash function used for computing data digest for signatures is BLAKE2s.

- Signature system is Ed25519 (allows combining signatures by multiple keys into one).

- All multibyte integer values are little endian.

- There is a tool called headertool.py which checks validity of the bootloader/firmware images including their headers.

Bootloader Format

Trezor Core (second stage) bootloader consists of 2 parts:

- bootloader header

- bootloader code

Bootloader Header

Total length of bootloader header is always 1024 bytes.

| offset | length | name | description |

|---|---|---|---|

| 0x0000 | 4 | magic | firmware magic TRZB |

| 0x0004 | 4 | hdrlen | length of the bootloader header |

| 0x0008 | 4 | expiry | valid until timestamp (0=infinity) |

| 0x000C | 4 | codelen | length of the bootloader code (without the header) |

| 0x0010 | 1 | vmajor | version (major) |

| 0x0011 | 1 | vminor | version (minor) |

| 0x0012 | 1 | vpatch | version (patch) |

| 0x0013 | 1 | vbuild | version (build) |

| 0x0014 | 1 | fix_vmajor | version of last critical bugfix (major) |

| 0x0015 | 1 | fix_vminor | version of last critical bugfix (minor) |

| 0x0016 | 1 | fix_vpatch | version of last critical bugfix (patch) |

| 0x0017 | 1 | fix_vbuild | version of last critical bugfix (build) |

| 0x0018 | 8 | reserved | not used yet (zeroed) |

| 0x0020 | 32 | hash1 | hash of the first code chunk (128 - 1 KiB), this excludes the header |

| 0x0040 | 32 | hash2 | hash of the second code chunk (128 KiB), zeroed if unused |

| ... | ... | ... | ... |

| 0x0200 | 32 | hash16 | hash of the last possible code chunk (128 KiB), zeroed if unused |

| 0x0220 | 415 | reserved | not used yet (zeroed) |

| 0x03BF | 1 | sigmask | SatoshiLabs signature indexes (bitmap) |

| 0x03C0 | 64 | sig | SatoshiLabs aggregated signature of the bootloader header |

Firmware Format

Trezor Core firmware consists of 3 parts:

- vendor header

- firmware header

- firmware code

Vendor Header

Total length of vendor header is 84 + 32 * (number of pubkeys) + (length of vendor string rounded up to multiple of 4) + (length of vendor image) bytes rounded up to the closest multiple of 512 bytes.

| offset | length | name | description |

|---|---|---|---|

| 0x0000 | 4 | magic | firmware magic TRZV |

| 0x0004 | 4 | hdrlen | length of the vendor header (multiple of 512) |

| 0x0008 | 4 | expiry | valid until timestamp (0=infinity) |

| 0x000C | 1 | vmajor | version (major) |

| 0x000D | 1 | vminor | version (minor) |

| 0x000E | 1 | vsig_m | number of signatures needed to run the firmware from this vendor |

| 0x000F | 1 | vsig_n | number of different pubkeys vendor provides for signing |

| 0x0010 | 2 | vtrust | level of vendor trust (bitmap) |

| 0x0012 | 14 | reserved | not used yet (zeroed) |

| 0x0020 | 32 | vpub1 | vendor pubkey 1 |

| ... | ... | ... | ... |

| ? | 32 | vpubn | vendor pubkey n |

| ? | 1 | vstr_len | vendor string length |

| ? | ? | vstr | vendor string |

| ? | ? | vstrpad | padding to a multiple of 4 bytes |

| ? | ? | vimg | vendor image (120x120 pixels in TOIf format) |

| ? | ? | reserved | padding to an address that is -65 modulo 512 (zeroed) |

| ? | 1 | sigmask | SatoshiLabs signature indexes (bitmap) |

| ? | 64 | sig | SatoshiLabs aggregated signature of the vendor header |

Vendor Trust

Vendor trust is stored as bitmap where unset bit means the feature is active.

| bit | hex | meaning |

|---|---|---|

| 0 | 0x0001 | wait 1 second |

| 1 | 0x0002 | wait 2 seconds |

| 2 | 0x0004 | wait 4 seconds |

| 3 | 0x0008 | wait 8 seconds |

| 4 | 0x0010 | use red background instead of black one |

| 5 | 0x0020 | require user click to continue |

| 6 | 0x0040 | show vendor string (not just the logo) |

| 7 | 0x0080 | allow access to pairing secret |

| 8 | 0x0100 | disable access to pairing secret |

Bits 0 to 6 represent vendor screen settings. The wait times are additive.

Two bits are used for access to the pairing secret for historical reasons. On T2B1 only bit 7 is evaluated. On newer models, both bits 7 and 8 are evaluated.

Firmware Header

Total length of firmware header is always 1024 bytes.

| offset | length | name | description |

|---|---|---|---|

| 0x0000 | 4 | magic | firmware magic TRZF |

| 0x0004 | 4 | hdrlen | length of the firmware header |

| 0x0008 | 4 | expiry | valid until timestamp (0=infinity) |

| 0x000C | 4 | codelen | length of the firmware code (without the header) |

| 0x0010 | 1 | vmajor | version (major) |

| 0x0011 | 1 | vminor | version (minor) |

| 0x0012 | 1 | vpatch | version (patch) |

| 0x0013 | 1 | vbuild | version (build) |

| 0x0014 | 1 | fix_vmajor | version of last critical bugfix (major) |

| 0x0015 | 1 | fix_vminor | version of last critical bugfix (minor) |

| 0x0016 | 1 | fix_vpatch | version of last critical bugfix (patch) |

| 0x0017 | 1 | fix_vbuild | version of last critical bugfix (build) |

| 0x0018 | 8 | reserved | not used yet (zeroed) |

| 0x0020 | 32 | hash1 | hash of the first code chunk excluding both the firmware and the vendor header (128 - 1 - [vendor header length] KiB) |

| 0x0040 | 32 | hash2 | hash of the second code chunk (128 KiB), zeroed if unused |

| ... | ... | ... | ... |

| 0x0200 | 32 | hash16 | hash of the last possible code chunk (128 KiB), zeroed if unused |

| 0x0220 | 415 | reserved | not used yet (zeroed) |

| 0x03BF | 1 | sigmask | vendor signature indexes (bitmap) |

| 0x03C0 | 64 | sig | vendor aggregated signature of the firmware header |

Trezor Core coding style

Python coding style

Run make pystyle from repository root to perform all style checks and auto-format

where possible.

General style notes

See rules for exceptions in the Exceptions documentation.

Type annotations

We prefer Python 3.10 style annotations:

- instead of

List[int], uselist[int], ditto forTuple,DictandSet - instead of

Optional[int], useint | None - instead of

Union[int, str], useint | str

This also applies inside if TYPE_CHECKING branches.

[!NOTE] For existing Python code, for example in

trezorlib, style checkers will complain that newer Python is required for the preferred annotation style. To avoid this issue, usefrom __future__ import annotations.

Type-checking imports

At run-time, the typing module is not available. There is compile-time magic that

removes all from typing imports and contents of if TYPE_CHECKING branches.

It is important to put typing-only imports into if TYPE_CHECKING, to make sure that

these modules are not needlessly pulled in at run-time.

Due to the compile-time magic, it is always possible to put a from typing import

at top level. The style for doing that are as follows:

- If the module needs to import other modules, create type aliases, TypeVars or

Protocols, the only top-level import should be

TYPE_CHECKING. Everything else (including other items fromtypingmodule) should be imported in theTYPE_CHECKINGbranch:from typing import TYPE_CHECKING if TYPE_CHECKING: from typing import Any, TypeVar, Union from trezor.messages import SomeMessage TypeAlias = Union[int, str] T = TypeVar("T") - If the module only needs items from

typing, you should not create aTYPE_CHECKINGbranch, and instead import all required items on top level:from typing import Any, Iterator, Sequence

Tools

Configurations of specific Python style tools (isort, flake8, pylint) can be found

in root setup.cfg.

Formatting

We are auto-formatting code with black and use the black code

style.

We use isort to organize imports.

Linting

We use flake8 lints, disabling only those that conflict with black code style.

We use a select subset of pylint checks that are hard-enforced.

Type checking

We use pyright for type-checking. The codebase is fully type-checked, except for

the Monero app (as of 2022-01).

C coding style

Formatting is done by clang-format. We are using the Google code

style.

Run make cstyle from repository root to auto-format.

Rust coding style

Formatting is done by rustfmt. We are using the Rust

style.

Run make ruststyle from repository root to auto-format.

Trezor firmware on STM32F429I-DISC1

The STM32F429I-DISC1 evaluation board has similar MCU to Trezor Model T as well as compatible touchscreen.

On the board, mini-USB is used to flash the firmware using the integrated ST-Link, as well as power the board. The micro-USB connector is used by the firmware to communicate with the trezor client. I.e. normally you need both cables connected.

Make sure JP1, JP2, JP3, and CN4 are fitted with jumpers (board is in this state by default).

Building and flashing

Follow the normal build instructions however pass

TREZOR_MODEL=DISC1 to make:

# build firmware images

make build_boardloader build_bootloader build_firmware TREZOR_MODEL=DISC1

# use openocd to flash everything through st-link

make flash

Reset board after command finishes.

DISC 2

DISC2 is an evaluation board STM32U5G9J used for firmware development of Trezor models with the STM32U5.

The kit has accessible pins, a display, and an embedded ST-Link.

To build and flash firmware to the DISC2 target, follow these instructions:

- Compile the firmware for the target with TREZOR_MODEL=DISC2 and BOOTLOADER_DEVEL=1

cd core

TREZOR_MODEL=DISC2 BOOTLOADER_DEVEL=1 make vendor build_boardloader build_bootloader build_firmware

-

Ensure that TrustZone is enabled on the DISC2 device, as explained here.

-

Connect the DISC2 ST-Link to the PC using a micro-USB cable (connector CN5).

-

Erase the DISC2 flash.

TREZOR_MODEL=DISC2 make flash_erase

- Flash the freshly compiled firmware from step 1.

TREZOR_MODEL=DISC2 make flash

- Reset the device (you may need to do this a couple of times) until it boots up.

Exceptions in Core

From version 2.3.0 we try to follow few rules about how we use exceptions. All new code MUST follow these.

Usage

You MAY use any exceptions in Core's logic. Exceptions from wire.errors SHOULD be

the final exceptions that are thrown and SHOULD NOT be caught. Note that wire.Error

is a type of exception that is intended to be sent out over the wire. It should only

be used in contexts where that behavior is appropriate.

Custom exception type hierarchies SHOULD always be derived directly from Exception. They SHOULD NOT be derived from other built-in exceptions (such as ValueError, TypeError, etc.)

Deriving a custom exception type signals an intention to catch and handle it somewhere in the code. For this reason, custom exception types SHOULD NOT be derived from wire.Error and subclasses.

Exception strings, including in internal exceptions, SHOULD only be used in cases

where the text is intended to be shown on the host. Exception strings MUST NOT

contain any sensitive information. An explanation of an internal exception MAY be

placed as a comment on the raise statement, to aid debugging. If an exception is

thrown with no arguments, the exception class SHOULD be thrown instead of a new

object, i.e., raise CustomError instead of raise CustomError().

Tl;dr

- Do not use

wire.errorsfortry-catchstatements, use other exceptions. - Use

wire.errorssolely as a way to communicate errors to the Host, do not include them somewhere deep in the stack. - Do not put sensitive information in exception's message. If you are not sure, do not

add any message and provide a comment next to the

raisestatement. - Use

raise CustomErrorinstead ofraise CustomError()if you are omitting the exception message.

Memory fragmentation management

Trezor-core memory is managed by a mark-and-sweep garbage collector. Throughout the run-time of the firmware, the memory space gets increasingly fragmented as the GC sweep is initiated at arbitrary points.

To combat fragmentation, we attempt to thoroughly clear the memory space after finishing every workflow, and keep only a limited set of modules alive at all times. These must take care to not hold external references.

Always active modules

The following modules are kept loaded at all times:

trezortrezor.utilsstoragestorage.commonstorage.cachestorage.devicestorage.fido2trezor.pin- held alive because the functionshow_pin_timeoutis registered as a callback fortrezorconfigand storage unlock operationsusb

The above modules are only allowed to import C modules (trezorconfig, trezorutils,

trezorcrypto, etc.) or each other. We currently do not have any automation to enforce

this, so please be careful when editing them.

Presizing

To save storage, Micropython only preallocates 1 slot in a module dict. Most of our modules use more slots than that. This means that the dict is reallocated, possibly several times. This is inconvenient at most times, but especially undesirable when it would happen to an always-active module at some point at run-time. The allocator would put the newly reallocated dict somewhere in the middle of the GC arena, and it would stay there.

This does happen in practice: e.g., when you import trezor.strings, a new reference

strings is inserted into the trezor module.

For this reason, we call utils.presize_module on trezor and storage at first

import time. The sizes are determined empirically and it might be necessary to raise

them in the future.

The backing storage for sys.modules can also be reallocated at run-time. We configure

Micropython to preallocate 160 slots in mpconfigport.h variable

MICROPY_LOADED_MODULES_DICT_SIZE. This is asserted at the end of unimport in

trezor.utils, so if we ever need more modules than that, the test suite should catch

it.

Presizing is also applied to __main__ module by setting MICROPY_MAIN_DICT_SIZE.

Top-level and function-local imports

In order to keep the imported image size in check, in certain places we avoid importing something at top-level, and instead import it in a function which actually needs the functionality. That way the module can be imported without immediately pulling in all of its possible dependencies.

The following imports trezor.ui at import time - when importing module, trezor.ui

is always imported, regardless of whether anyone calls the function draw_foo:

# module.py

import trezor.ui

def draw_foo():

trezor.ui.display.draw_text("Foo")

The following defers the import until the function is called:

# module.py

def draw_foo():

import trezor.ui

trezor.ui.display.draw_text("Foo")

The general rules of thumb are as follows:

C modules can always be imported.

These do not take any space in RAM.

Always-active modules can always be imported.

They are always active, so we do not need to worry about allocating.

In apps.*, we prefer clarity over optimization.

It might still be useful to, e.g., avoid importing trezor.ui.layouts for operations

that are sometimes silent, but it is not too important. All of the application code is

scrubbed from memory when the workflow exits.

In system modules, we are extra careful.

This means apps.base, apps.common, and everything outside the apps namespace.

A module should only import on top-level if the import is either:

- C module or an always active module,

- a module that is expected to already be imported when this module is loaded

(this is often the case in

apps.common-- e.g.,trezor.workflowis not always active, but is presumed active as soon assessionis up), - small module without further dependencies,

- something without which the whole module doesn't make sense (this is usually the case

with layout code:

apps.common.confirmdoesn't make sense without importingtrezor.ui)

Avoid importing trezor.ui.

The trezor.ui namespace is one of the largest in the codebase, not counting

application code. Importing the trezor.ui module alone is not a big problem, but

pulling in anything from trezor.ui.layouts or trezor.ui.components usually means

loading the full UI machinery. We only want to do that if we are sure that whoever is

importing us is going to be drawing things.

Trezor T Memory Layout

Flash

| sector | range | size | function |

|---|---|---|---|

| Sector 0 | 0x08000000 - 0x08003FFF | 16 KiB | boardloader (1st stage) (write-protected) |

| Sector 1 | 0x08004000 - 0x08007FFF | 16 KiB | boardloader (1st stage) (write-protected) |

| Sector 2 | 0x08008000 - 0x0800BFFF | 16 KiB | boardloader (1st stage) (write-protected) |

| Sector 3 | 0x0800C000 - 0x0800FFFF | 16 KiB | unused |

| Sector 4 | 0x08010000 - 0x0801FFFF | 64 KiB | storage area #1 |

| Sector 5 | 0x08020000 - 0x0803FFFF | 128 KiB | bootloader (2nd stage) |

| Sector 6 | 0x08040000 - 0x0805FFFF | 128 KiB | firmware |

| Sector 7 | 0x08060000 - 0x0807FFFF | 128 KiB | firmware |

| Sector 8 | 0x08080000 - 0x0809FFFF | 128 KiB | firmware |

| Sector 9 | 0x080A0000 - 0x080BFFFF | 128 KiB | firmware |

| Sector 10 | 0x080C0000 - 0x080DFFFF | 128 KiB | firmware |

| Sector 11 | 0x080E0000 - 0x080FFFFF | 128 KiB | firmware |

| Sector 12 | 0x08100000 - 0x08103FFF | 16 KiB | unused |

| Sector 13 | 0x08104000 - 0x08107FFF | 16 KiB | unused |

| Sector 14 | 0x08108000 - 0x0810BFFF | 16 KiB | unused |

| Sector 15 | 0x0810C000 - 0x0810FFFF | 16 KiB | unused |

| Sector 16 | 0x08110000 - 0x0811FFFF | 64 KiB | storage area #2 |

| Sector 17 | 0x08120000 - 0x0813FFFF | 128 KiB | firmware extra |

| Sector 18 | 0x08140000 - 0x0815FFFF | 128 KiB | firmware extra |

| Sector 19 | 0x08160000 - 0x0817FFFF | 128 KiB | firmware extra |

| Sector 20 | 0x08180000 - 0x0819FFFF | 128 KiB | firmware extra |

| Sector 21 | 0x081A0000 - 0x081BFFFF | 128 KiB | firmware extra |

| Sector 22 | 0x081C0000 - 0x081DFFFF | 128 KiB | firmware extra |

| Sector 23 | 0x081E0000 - 0x081FFFFF | 128 KiB | firmware extra |

OTP

| block | range | size | function |

|---|---|---|---|

| block 0 | 0x1FFF7800 - 0x1FFF781F | 32 B | device batch: {MODEL_IDENTIFIER}-YYMMDD |

| block 1 | 0x1FFF7820 - 0x1FFF783F | 32 B | bootloader downgrade protection |

| block 2 | 0x1FFF7840 - 0x1FFF785F | 32 B | vendor keys lock |

| block 3 | 0x1FFF7860 - 0x1FFF787F | 32 B | entropy/randomness |

| block 4 | 0x1FFF7880 - 0x1FFF789F | 32 B | device variant information |

| block 5 | 0x1FFF78A0 - 0x1FFF78BF | 32 B | unused |

| block 6 | 0x1FFF78C0 - 0x1FFF78DF | 32 B | unused |

| block 7 | 0x1FFF78E0 - 0x1FFF78FF | 32 B | unused |

| block 8 | 0x1FFF7900 - 0x1FFF791F | 32 B | unused |

| block 9 | 0x1FFF7920 - 0x1FFF793F | 32 B | unused |

| block 10 | 0x1FFF7940 - 0x1FFF795F | 32 B | unused |

| block 11 | 0x1FFF7960 - 0x1FFF797F | 32 B | unused |

| block 12 | 0x1FFF7980 - 0x1FFF799F | 32 B | unused |

| block 13 | 0x1FFF79A0 - 0x1FFF79BF | 32 B | unused |

| block 14 | 0x1FFF79C0 - 0x1FFF79DF | 32 B | unused |

| block 15 | 0x1FFF79E0 - 0x1FFF79FF | 32 B | unused |

RAM

| region | range | size | function |

|---|---|---|---|

| CCMRAM | 0x10000000 - 0x1000FFFF | 64 KiB | Core Coupled Memory |

| SRAM1 | 0x20000000 - 0x2001BFFF | 112 KiB | General Purpose SRAM |

| SRAM2 | 0x2001C000 - 0x2001FFFF | 16 KiB | General Purpose SRAM |

| SRAM3 | 0x20020000 - 0x2002FFFF | 64 KiB | General Purpose SRAM |

TrustZone

New Trezor models are built on the STM32U5 series microcontrollers, which are based on the ARM Cortex-M33 and provide advanced security features, such as TrustZone.

When building firmware for such a device (Blank Trezor device or DISC2 evaluation kit), you need to ensure that TrustZone is enabled in the STM32 microcontroller’s option bytes.

Enable TrustZone in STM32 Option Bytes

-

Download and install STM32CubeProgrammer.

-

Connect the device via ST-Link (DISC2 has an embedded ST-Link; for Trezor devices, use an external one).

-

Power on the device (connect via USB).

-

Open STM32CubeProgrammer and connect to the device.

-

Open the Option Bytes (OB) tab.

-

In the User Configuration tab, enable TZEN, then press Apply.

-

In the Boot Configuration tab, change the SECBOOTADD0 address to 0x0C004000, then press Apply.

-

Disconnect the ST-Link and reset the device.

Use of SLIP-39 in trezor-core

SLIP-39 describes a way to securely back up a secret value using Shamir's Secret Sharing scheme.

The secret value, called a Master Secret (MS) in SLIP-39 terminology, is first encrypted by a passphrase, producing an Encrypted Master Secret (EMS). The EMS is then split into a number of shares, which are encoded as a set of mnemonic words. Afterwards, it is possible to recombine some or all of the shares to obtain back the EMS, and when the correct passphrase is provided, decrypt the original Master Secret.

This does not quite match Trezor's use of the "passphrase protection" feature, namely that any passphrase is valid, and using any passphrase will yield a working wallet.

SLIP-39 enables this usage by specifying that passphrases are not validated in any way. Decrypting an EMS with any passphrase will produce data usable as the Master Secret, regardless of whether it is the original data or not.

Seed handling in Trezor

Trezor stores a mnemonic secret in a storage field _MNEMONIC_SECRET. This is the

input for the root node derivation process: mnemonic.get_seed(passphrase) takes the

user-provided passphrase as an argument, and derives the appropriate root node from the

mnemonic secret.

With BIP-39, the recovery phrase itself is the mnemonic secret. During device

initialization, the raw recovery phrase is given to the user, and also directly stored

in the _MNEMONIC_SECRET field. Whenever the root node is required, it is derived by

applying PBKDF2 to the mnemonic secret plus passphrase.

For SLIP-39 it is not practical to store the raw data of the recovery shares. During

device initialization, a random Encrypted Master Secret is generated and stored as

_MNEMONIC_SECRET. SLIP-39 encryption parameters (a random identifier, extendable backup

flag and an iteration exponent) are stored alongside the mnemonic secret in their own

storage fields. Whenever the root node is required, it is derived by "decrypting" the

stored mnemonic secret with the provided passphrase.

SLIP-39 implementation

The reference implementation of SLIP-39 provides the following high-level API:

generate_mnemonics(group parameters, master_secret, passphrase): Encrypt Master Secret with the provided passphrase, and split into a number of shares defined via the group parameters. Implemented using the following:encrypt(master_secret, passphrase, iteration_exponent, identifier, extendable): Encrypt the Master Secret with the given passphrase and parameters.split_ems(group parameters, identifier, extendable, iteration_exponent, encrypted_master_secret): Split the encrypted secret and encode the metadata into a set of shares defined via the group parameters.

combine_mnemonics(set of shares, passphrase): Combine the given set of shares to reconstruct the secret, then decrypt it with the provided passphrase. Implemented using the following:recover_ems(set of shares): Combine the given set of shares to obtain the encrypted master secret, identifier, extendable backup flag and iteration exponent.decrypt(encrypted_master_secret, passphrase, iteration_exponent, identifier, extendable): Decrypt the secret with the given passphrase and parameters, to obtain the original Master Secret.

Only the functions denoted in bold are implemented in trezor-core. Recovery shares

are generated with split_ems and combined with recover_ems. Passphrase decryption is

done with decrypt. There is never an original "master secret" to be encrypted, so the

encrypt function is also omitted.

Step-by-step

Device initialization

This process does not use passphrase.

- Generate the required number of random bits (128 or 256), and store as

_MNEMONIC_SECRET. - Translate the host-specified backup type to an extendable backup type and store it as

_BACKUP_TYPE. - Store the default iteration exponent

1as_SLIP39_ITERATION_EXPONENT. - The storage now contains all parameters required for seed derivation.

Seed derivation

This is the only process that uses passphrase.

- If passphrase is enabled, prompt user for passphrase. Otherwise use empty string.

- Use

slip39.decrypt(_MNEMONIC_SECRET, passphrase, _SLIP39_ITERATION_EXPONENT, _SLIP39_IDENTIFIER)to "decrypt" the root node that matches the provided passphrase.

Seed backup

This process does not use passphrase.

- Prompt user for group parameters (number of groups, number of shares per group, etc.).

- Use

slip39.split_ems(group parameters, _SLIP39_IDENTIFIER, _SLIP39_ITERATION_EXPONENT, _MNEMONIC_SECRET)to split the secret into the given number of shares.

Seed recovery

This process does not use passphrase.

- Prompt the user to enter enough shares.

- Use

slip39.recover_ems(shares)to combine the shares and get metadata. - Store the Encrypted Master Secret as

_MNEMONIC_SECRET. - Infer the backup type and store it as

_BACKUP_TYPE. - If the backup type is not extendable, then store the identifier as

_SLIP39_IDENTIFIER. - Store the iteration exponent as

_SLIP39_ITERATION_EXPONENT. - The storage now contains all parameters required for seed derivation.

Translations

Overview

Trezor stores translated strings in .json files in core/translations directory - e.g. de.json.

When no foreign-language is present, the English version is used - en.json.

Translations files contain the translated strings and also all the special font characters as a link to .json files in fonts directory. Font files are not needed for english, which uses just default/built-in ASCII characters.

Generating blobs

To generate up-to-date blobs, use python core/translations/cli.py gen - they will appear in core/translations as translations-*.bin files. The files contain information about the specific hardware model, language and device version.

Uploading blobs

To upload blobs with foreign-language translations, use trezorctl set language <blob_location> command.

To switch the language back into english, use trezorctl set language -r.

Translations blob format (v1)

| offset | length | name | description | hash |

|---|---|---|---|---|

| 0x0000 | 6 | magic | blob magic TRTR01 | |

| 0x0006 | 4 | container_len | total length (up to padding) | |

| 0x000A | 2 | header_len | header length | |

| 0x000C | 2 | header_magic | header magic TR | |

| 0x000E | 8 | language_tag | BCP 47 language tag (e.g. cs-CZ, en-US, ...) | header |

| 0x0016 | 4 | version | 4 bytes of version (major, minor, patch, build) | header |

| 0x001A | 4 | data_len | length of the raw data, i.e. translations + fonts | header |

| 0x001E | 32 | data_hash | SHA-256 hash of the data | header |

| 0x003E | header_len - 46 | ignored | reserved for forward compatibility | header |

| ? | 2 | proof_len | length of merkle proof and signature in bytes | |

| ? | 1 | proof_count | number of merkle proof items following | |

| ? | proof_count * 20 | proof | array of SHA-256 hashes | |

| ? | 1 | sig_mask | CoSi signature mask | |

| ? | 64 | sig | ed25519 CoSi signature of merkle root | |

| ? | 2 | translations_chunks_count | number of translated strings chunks | data |

| ? | 2 | 1st_translations_chunk_len | length of the 1st translated strings chunk | data |

| ? | 1st_translations_chunk_len | 1st_translations_chunk | 1st translated string chunk data | data |

| ? | 2 | 2nd_translations_chunk_len | length of the 2nd translated strings chunk | data |

| ? | 2nd_translations_chunk_len | 2nd_translations_chunk | 2nd translated string chunk data | data |

| ? | ... | ... | ... | data |

| ? | 2 | last_translations_chunk_len | last translated string chunk data | data |

| ? | last_translations_chunk_len | last_translations_chunk | length of the last translated strings chunk | data |

| ? | 2 | fonts_len | length of the font data | data |

| ? | fonts_len | fonts | font data | data |

| ? | ? | padding | 0xff bytes padding to flash sector boundary |

Translation data

Offsets refer to the strings field, up to the following offset. First offset is always 0, following offset must always be equal or greater (equal denotes empty string).

| offset | length | name | description |

|---|---|---|---|

| 0x0000 | 2 | count | number of offsets, excluding the sentinel |

| 0x0002 | 2 | offset[0] | offset of string id 0 in the strings field |

| ... | 2 | ... | |

| ? | 2 | offset[count - 1] | offset of string id count - 1 in the strings field |

| ? | 2 | offset[count] | offset past the last element |

| ? | translations_len - 2 * (count + 2) | strings | concatenation of UTF-8 strings |

Fonts

Ids must be in increasing order, offsets must be in non-decreasing order. First offset must be 0.

| offset | length | name | description |

|---|---|---|---|

| 0x0000 | 2 | count | number of items in the offset table, excluding the sentinel |

| 0x0002 | 2 | id[0] | numeric id of the first font |

| 0x0004 | 2 | offset[0] | offset of the first font in the fonts field |

| ... | ... | ... | |

| ? | ? | id[count - 1] | numeric id of the last font |

| ? | ? | offset[count - 1] | offset of the last font in the fonts field |

| ? | ? | sentinel_id | sentinel 0xffff |

| ? | ? | sentinel_offset | offset past the end of last element |

| ? | fonts | concatenation of fonts, format defined in the next section | |

| ? | 0-3 | padding | padding (any value) for alignment purposes |

Font data

The format is exactly the same as the previous table, the only difference is the interpretation of the payload.

| offset | length | name | description |

|---|---|---|---|

| 0x0000 | 2 | count | number of items in the offset table, excluding the sentinel |

| 0x0002 | 2 | id[0] | id (Unicode code point) of the first glyph |

| 0x0004 | 2 | offset[0] | offset of the first glyph in the glyphs field |

| ... | ... | ... | |

| ? | ? | id[count - 1] | id (Unicode code point) of the last glyph |

| ? | ? | offset[count - 1] | offset of the last glyph in the glyphs field |

| ? | ? | sentinel_id | sentinel 0xffff |

| ? | ? | sentinel_offset | offset past the end of last element |

| ? | glyphs | concatenation of glyph bitmaps | |

| ? | 0-3 | padding | padding (any value) for alignment purposes |

Previous versions

Translations blob format (v0)

| offset | length | name | description | hash |

|---|---|---|---|---|

| 0x0000 | 6 | magic | blob magic TRTR00 | |

| 0x0006 | 2 | container_len | total length (up to padding) | |

| 0x0008 | 2 | header_len | header length | |

| 0x000A | 2 | header_magic | header magic TR | |

| 0x000C | 8 | language_tag | BCP 47 language tag (e.g. cs-CZ, en-US, ...) | header |

| 0x0014 | 4 | version | 4 bytes of version (major, minor, patch, build) | header |

| 0x0018 | 2 | data_len | length of the raw data, i.e. translations + fonts | header |

| 0x001A | 32 | data_hash | SHA-256 hash of the data | header |

| 0x003A | header_len - 48 | ignored | reserved for forward compatibility | header |

| ? | 2 | proof_len | length of merkle proof and signature in bytes | |

| ? | 1 | proof_count | number of merkle proof items following | |

| ? | proof_count * 20 | proof | array of SHA-256 hashes |

UI Layout Lifecycle

Overview

There can be at most one UI layout running. The running layout is stored in

ui.CURRENT_LAYOUT. The value of this attribute must only be managed internally by the

layout objects themselves.

There are two kinds of layouts. The Layout class represents the normal kind of layout

which can accept user interaction or timer events. Such layout can return a result

of the interaction, retrievable from the Layout.get_result() async method. Typically,

calling code will block on an await for the result.

ProgressLayout represents loaders for long-running operations. It does not respond to

events and cannot return a result. Calling code will start the progress layout in the

background, call to it to update progress via ProgressLayout.report(), and then stop

it when done.

Python layout object lifecycle

A newly created layout object is in READY state. It does not accept events, has no background tasks, does not draw on screen.

When started, it moves into RUNNING state. It is drawn on screen (with backlight

on), accepts events, and runs background tasks. The value of ui.CURRENT_LAYOUT is set

to the running layout object.

(This implies that at most one layout can be in RUNNING state.)

Layout in RUNNING state may stop and return a result, either in response to a user interaction event (touch, button click, USB) or an internal timer firing. This moves it into a FINISHED state. It is no longer shown on screen (backlight is off unless another layout turns it on again), does not accept events, and does not run background tasks.

A layout in a FINISHED state has a result value, available for pickup by

awaiting get_result().

Stopping a layout before returning a result, or retrieving a result of a FINISHED layout, will move it back to READY state.

State transitions

+-------+ start() +-----------+ <event> +------------+

| READY | -----------> | RUNNING | ------------> | FINISHED |

+-------+ +-----------+ +------------+

^ ^ | |

| | | |

| +------- stop() -------+ |

| |

+--------------------- get_result() -------------------+

Calling start() checks if other layout is running, and if it is, stops it first. Then

it performs the setup and moves layout into RUNNING state.

(At most one layout can be in RUNNING state at one time. That means that before a layout moves to RUNNING, the previously running layout must move out.)

When layout is in RUNNING state, calling start() is a no-op. When layout is in

FINISHED state, calling start() fails an assertion.

After start() returns, the layout is in RUNNING state. It will stay in this state

until it returns a result, or is stopped.

Calling stop() on a READY or FINISHED layout is a no-op. Calling stop() on a

RUNNING layout will shut down any tasks waiting on the layout's result, and move to

READY state.

After stop() returns, the layout is not in RUNNING state and the current layout is

no longer this layout.

Awaiting get_result() will resume the lifecycle from its current stage, that is:

- in READY state, starts the layout and waits for its result

- in RUNNING state, waits for the result

- in FINISHED state, returns the result

After get_result() returns, the layout is in READY state.

All state transitions are synchronous -- so, in terms of trezor-core's cooperative multitasking, effectively atomic.

Global layout lifecycle

When Trezor boots, ui.CURRENT_LAYOUT is None. The screen backlight is on and displays

the "filled lock" welcome screen with model name.

When a layout is started, the backlight is turned on and the layout is drawn on screen.

ui.CURRENT_LAYOUT is the instance of the layout.

When a layout is stopped, the backlight is turned off and ui.CURRENT_LAYOUT is set to

None.

Between two different layouts, there is always an interval where backlight is off and

the value of ui.CURRENT_LAYOUT is None. This state may not be visible from the

outside; it is possible to synchronously go from A -> None -> B. However, there MUST

be a None inbetween in all cases.

Rust layout object lifecycle

A layout on the Rust side is represented by the trait Layout, whose event() method

returns a value of type Option<LayoutState>. If this event caused a state transition,

the new state is returned.

Layout can be in one of four states:

Initial: the layout is freshly constructed. This is never returned as a result ofevent().Attached: the layout is running. Its timers have been started and it is accepting events. The state transition carries anOption<ButtonRequest>. If set, this is the ButtonRequest that should be sent to the host, as an indication that the layout is ready.Transitioning: the layout is running, but not ready to receive events; either a transition-in or a transition-out animation is running.

The enum value carries anAttachType, indicating which direction the transition is going. If this is an outgoing transition, the runtime is supposed to pass the attach type to the next layout, so that it can properly transition-in.Done: the layout has finished running. All its timers should be stopped, and there is a return value available via thevalue()method.

We currently do not keep precise track of transitioning animations; it would be a lot of effort to factor the code properly, while the only use case is debuglink state tracking, which works well enough as-is.

Simple layouts

Layouts that are not flows (i.e., have only one screen) are implemented as Components

with a ComponentMsgObj implementation. They are wrapped in a RootComponent struct

which essentially simulates the layout lifecycle, in the following manner:

- At start, the layout is

Initial. - After processing the